Technology Blog

2021.12.02

Integrating Machine Learning Models in Unity using NatML

Machine learning has many applications today, ranging from image analysis to self-driving cars. You might even want to take advantage of it in your games or AR applications. While you can outsource this work to a web service, there are cases where you want the machine learning functionality integrated directly into your standalone application, usable on all kinds of devices.

Due to the nature of Unity, integrating certain functionality into your application can be cumbersome. Mobile platforms do support machine learning through specialized native services such as ML Kit on Android and Core ML on iOS, but accessing these features can require a lot of platform-dependent integration effort. Some years ago, a number of projects tackled the integration of machine learning into Unity, especially with mobile devices and their AR capabilities as build targets. However, when I tried some of them with recent versions of iOS, I could not get them to work because of, among other things, many deprecated features and issues that seemed to be caused by API changes.

While searching for a decent solution, I stumbled across NatML. NatML is a very recent cross-platform machine learning runtime made specifically for the Unity Engine. With NatML, you can integrate machine learning features into your Unity application through a unified API and build for any common Unity target platform, including iOS, macOS, Android and Windows. NatML fully supports the ONNX model standard and even takes advantage of hardware machine learning accelerators across different platforms.

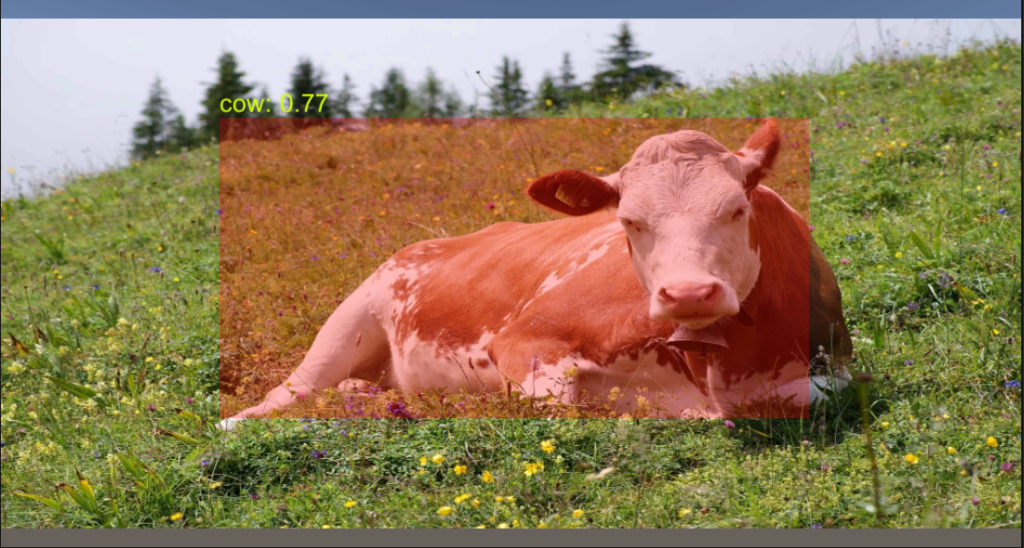

In this blog post, I will be showing a simple example of how to get started with NatML. Our goal will be to detect cows in single images by using the already available tiny-yolo-v3 model.

Installing NatML in your Unity Project

The first step to using NatML is to add and install the necessary NatML runtime dependency in your Unity project. You can either follow the instructions on the project’s GitHub page or directly add the following to your project’s Packages/manifest.json file:

    {
      "scopedRegistries": [
        {
          "name": "NatSuite Framework",
          "url": "https://registry.npmjs.com",
          "scopes": ["api.natsuite"]
        }
      ],
      "dependencies": {
        "api.natsuite.natml": "1.0.5"
      }
    }

The above both specifies the required NatML dependency and references an additional registry where that dependency can be found. This is necessary because, at this point, NatML does not seem to be available in the Unity package registry, only in the official npm registry.

After adding the NatML runtime to your project, you are already set to integrate any ONNX model into your application.

Integrating a Machine Learning Model

There are currently three ways of integrating a machine learning model into your Unity project:

- downloading the model from the NatML Hub on-the-fly during application runtime

- loading the model from a file during runtime

- loading the model from the streaming assets

Each approach comes with a respective method for loading the model data into memory, where it can then be used with a common API.

    // Choose one of these three to load the model data
    var modelData = await MLModelData.FromHub("@natsuite/tiny-yolo-v3");
    var modelData = await MLModelData.FromFile("/path/to/tiny-yolo-v3.onnx");
    var modelData = await MLModelData.FromStreamingAssets("tiny-yolo-v3.onnx");

    // Create the actual model instance
    var model = modelData.Deserialize();

About NatML Hub

NatML Hub is a website that hosts ONNX models for you to use in your application. Because you can download the models your application requires at any time, you do not need to include the model data in the build of your Unity application. You can just download the models you need, when you need them.

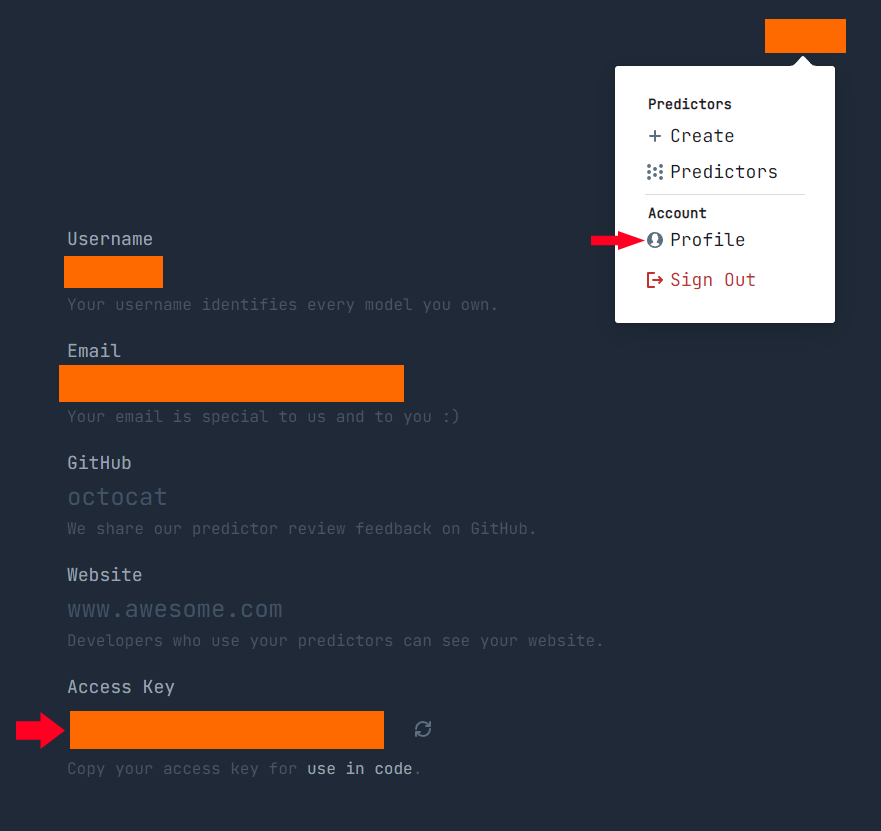

To be able to actually use NatML Hub, an account there is required. Once you have signed up, you will not only be able to download the model data you need, but also be able to upload your own ONNX models.

Also make sure to copy your NatML Hub access key, as you will need to pass it as a parameter for authentication when downloading a model directly from the hub. Not doing so will result in an error, even for public models.

    // Pass the access key to FromHub() to authenticate
    var modelData = await MLModelData.FromHub("@natsuite/tiny-yolo-v3", accessKey);

Local Models vs. Hub Models

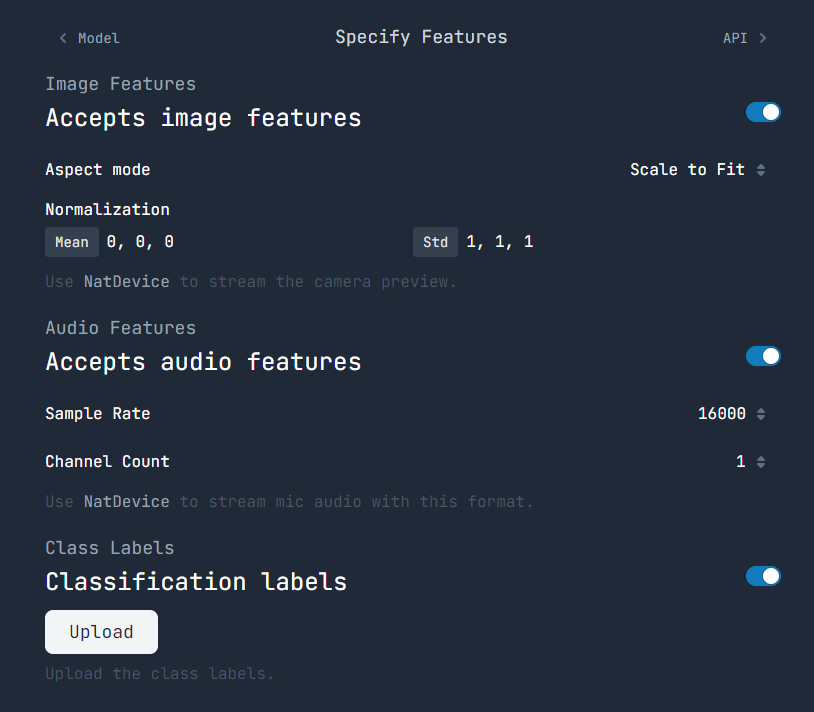

When uploading an ONNX model to NatML Hub, there are several additional options that can be set for the model. These settings are stored with the model on the hub and are carried over to the MLModelData object that is returned when you download it at runtime.

If you want to package your model file directly with your application, you can do so by using one of the other two loading methods, FromFile() or FromStreamingAssets(). However, the model settings just mentioned will not be set in the returned MLModelData object, as they are not included in the original ONNX model file itself. If you do not want the default settings to apply, you have to set them explicitly.

At the time of writing, the properties containing these settings are read-only, so you cannot set them directly. However, using the debugger we can find the underlying object fields and set them via reflection when loading the model data. The example in this section sets the aspect mode to MLImageFeature.AspectMode.AspectFit instead of its default setting MLImageFeature.AspectMode.ScaleToFit.
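Because this approach depends on a private field name that is not part of the public API and could change in a later NatML release, it may be worth guarding the lookup. The following is a minimal, NatML-free sketch of that pattern. `FakeModelData`, `AspectMode` and `PrivateFieldSetter` are hypothetical stand-ins invented for illustration, not NatML types.

```csharp
using System;
using System.Reflection;

// Hypothetical stand-ins so the sketch runs without NatML installed.
public enum AspectMode { ScaleToFit, AspectFit }

public sealed class FakeModelData
{
    // Private backing field behind a get-only property,
    // mirroring the situation described in the text.
    private AspectMode _aspectMode = AspectMode.ScaleToFit;
    public AspectMode aspectMode => _aspectMode;
}

public static class PrivateFieldSetter
{
    // Sets a private instance field by name. Returns false instead of throwing
    // when the field is missing (for example after a library update renamed it).
    // Note: only fields declared on the object's runtime type are found.
    public static bool TrySet(object target, string fieldName, object value)
    {
        if (target == null) throw new ArgumentNullException(nameof(target));
        var field = target.GetType().GetField(
            fieldName, BindingFlags.NonPublic | BindingFlags.Instance);
        if (field == null) return false;
        field.SetValue(target, value);
        return true;
    }
}
```

With NatML, the same helper could presumably be called as `PrivateFieldSetter.TrySet(modelData, "_aspectMode", MLImageFeature.AspectMode.AspectFit)`, assuming the field is still named `_aspectMode`. A `false` return value then signals that the field name needs to be checked again in the debugger.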

    // Look up the private backing field and overwrite its value
    var t = typeof(MLModelData);
    var field = t.GetField("_aspectMode", System.Reflection.BindingFlags.NonPublic | System.Reflection.BindingFlags.Instance);
    field.SetValue(modelData, MLImageFeature.AspectMode.AspectFit);

Using a NatML Machine Learning Model

To actually use the model, we will now have to build a predictor from the model instance we just created. Predictors are the actual wrappers around the machine learning model that will act as a bridge between our Unity C# code and the model itself.

A predictor’s job is to:

- translate the model input from C# types to values in memory that the model can understand and use

- marshal the model output written to some location in memory and translate it into a usable representation for our application
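To make these two responsibilities more concrete, here is a toy sketch of the idea in plain C#. It does not use NatML at all: `RawModel` and `ToyPredictor` are hypothetical names, and the "model" is just a delegate that maps a float buffer to a list of scores.

```csharp
using System;

// Hypothetical stand-in for a loaded model: raw floats in, raw scores out.
public delegate float[] RawModel(float[] input);

public sealed class ToyPredictor
{
    private readonly RawModel model;
    private readonly string[] labels;

    public ToyPredictor(RawModel model, string[] labels)
    {
        this.model = model ?? throw new ArgumentNullException(nameof(model));
        this.labels = labels ?? throw new ArgumentNullException(nameof(labels));
    }

    public (string label, float score) Predict(byte[] pixels)
    {
        // Step 1: translate the C# input into the float buffer the model expects.
        var input = new float[pixels.Length];
        for (var i = 0; i < pixels.Length; i++)
            input[i] = pixels[i] / 255f;

        // Step 2: run the model on that buffer.
        var scores = model(input);
        if (scores.Length == 0 || scores.Length != labels.Length)
            throw new InvalidOperationException("Model output does not match the label list.");

        // Step 3: translate the raw output into something the application can use,
        // here the label with the highest score.
        var best = 0;
        for (var i = 1; i < scores.Length; i++)
            if (scores[i] > scores[best]) best = i;
        return (labels[best], scores[best]);
    }
}
```

A real predictor does the same kind of work, only against the actual input and output layout of its model, which is why each model needs a predictor that matches it.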

For custom models, you might have to write your own predictor based on the interface of your machine learning model. We might cover the process of writing your own predictor in another blog post. For the tiny-yolo-v3 model, there already exists the TinyYOLOv3Predictor that is available on NatML Hub.

Each model on NatML Hub requires you to specify a matching predictor at the time of upload. Thus, when using a model directly from NatML Hub, you should also be able to download a working predictor from the model’s hub page. In the case of tiny-yolo-v3, open its hub page and click the Get button. This will download a .unitypackage file including the TinyYOLOv3Predictor (as well as a complete sample scene with sample usage code) for you to import into your project.

Once the import is completed, you will be able to use the TinyYOLOv3Predictor in your code.

    // Create a predictor for the model
    var predictor = new TinyYOLOv3Predictor(model);

    // Prepare the predictor input
    var inputFeature = new MLImageFeature(someTexture2D);
    (inputFeature.mean, inputFeature.std) = modelData.normalization;
    inputFeature.aspectMode = modelData.aspectMode;

    // Make prediction on an image
    var detections = predictor.Predict(inputFeature);

By calling the Predict() method and passing the required input, the chosen model is executed and its result returned. In the case of tiny-yolo-v3, we pass an image in the form of a texture object and get back an array of rectangles describing the positions of recognized objects in the image, as well as their names and confidence scores.

Please note that the Predict() method result and parameters will differ for each predictor, depending on the actual machine learning model that is used and its interface.
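The mean, std and aspectMode values copied from modelData in the snippet above describe how the image is prepared before it reaches the model. As a rough illustration of what such settings conventionally mean, the sketch below normalizes a value as `(value - mean) / std` and computes where an image ends up when it is scaled uniformly to fit a target size. This is an assumption about typical image preprocessing, not NatML's actual implementation, and `PreprocessingSketch` is a hypothetical helper.

```csharp
using System;

public static class PreprocessingSketch
{
    // Conventional normalization of a channel value in [0, 1]: (value - mean) / std.
    public static float Normalize(float value, float mean, float std)
    {
        if (std == 0f) throw new ArgumentException("std must be non-zero.", nameof(std));
        return (value - mean) / std;
    }

    // Aspect fit: scale uniformly so the whole image fits inside the target,
    // then center it. Returns the rectangle the image occupies in the target.
    public static (float x, float y, float width, float height) AspectFit(
        int srcWidth, int srcHeight, int dstWidth, int dstHeight)
    {
        if (srcWidth <= 0 || srcHeight <= 0 || dstWidth <= 0 || dstHeight <= 0)
            throw new ArgumentOutOfRangeException(nameof(srcWidth), "All dimensions must be positive.");
        var scale = Math.Min((float)dstWidth / srcWidth, (float)dstHeight / srcHeight);
        var width = srcWidth * scale;
        var height = srcHeight * scale;
        return ((dstWidth - width) / 2f, (dstHeight - height) / 2f, width, height);
    }
}
```

For example, fitting an 800x400 image into a 400x400 target gives a 400x200 rectangle that starts at y = 100, leaving empty bands above and below. Stretching the image to fill the whole target, as the name ScaleToFit suggests, would instead distort its proportions.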

Testing your Application

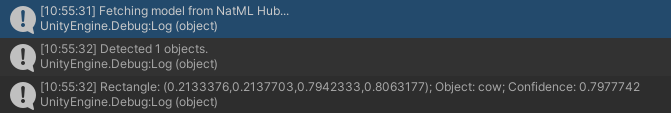

To test whether our machine learning model can be used successfully, we only need to pass an image of our choice to the Predict() call and check the results.

    // Create input feature
    ...

    // Detect
    var detections = predictor.Predict(inputFeature);

    // Inspect results
    Debug.Log($"Detected {detections.Length} objects.");
    foreach (var obj in detections)
    {
        Debug.Log($"Rectangle: ({obj.rect.xMin},{obj.rect.yMin},{obj.rect.xMax},{obj.rect.yMax}); Object: {obj.label}; Confidence: {obj.score}");
    }

The result should look somewhat like this:

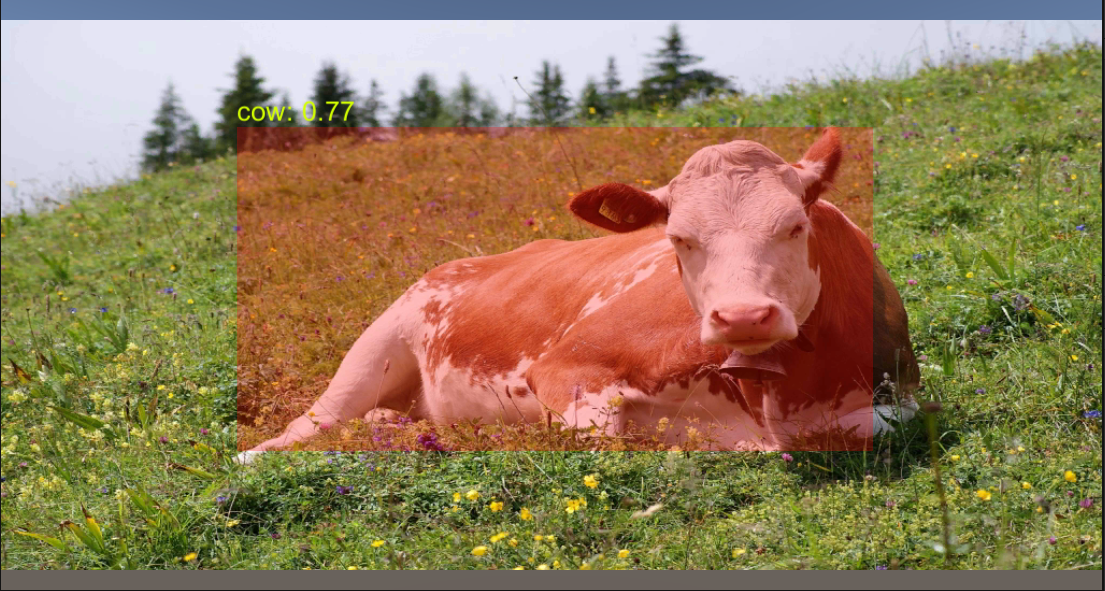
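Since the goal of this post is to find cows specifically, the raw list of detections usually needs one more filtering step. The following is a minimal sketch of such a filter in plain C#. The `Detection` struct is a hypothetical stand-in that carries only a label and a score, and both the label string "cow" and the threshold used in the usage note are assumptions that should be checked against the model's actual output.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for one detection; only label and score matter here.
public readonly struct Detection
{
    public readonly string label;
    public readonly float score;

    public Detection(string label, float score)
    {
        this.label = label;
        this.score = score;
    }
}

public static class DetectionFilter
{
    // Keeps detections with the given label (case-insensitive) whose score is
    // at least minScore, sorted from most to least confident.
    public static List<Detection> ByLabel(
        IEnumerable<Detection> detections, string label, float minScore)
    {
        if (detections == null) throw new ArgumentNullException(nameof(detections));
        return detections
            .Where(d => string.Equals(d.label, label, StringComparison.OrdinalIgnoreCase)
                        && d.score >= minScore)
            .OrderByDescending(d => d.score)
            .ToList();
    }
}
```

Calling `DetectionFilter.ByLabel(all, "cow", 0.5f)` on a list of such stand-in detections would keep only the cows the model is at least 50% confident about. With the real predictor output, the same condition could be applied to the label and score fields logged above.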

The .unitypackage that you downloaded earlier includes a nice sample scene that displays the detected objects in a more visually appealing way. If you like, you can check out that example as well. It is going to look somewhat like this:

Conclusion

Based on my experience so far, NatML is a great runtime for building ML-enabled Unity applications. The installation process is very easy, and the models provided on NatML Hub can be used right away. Even custom models can be integrated after investing some effort into implementing a matching predictor class.

NatML is still a very young project, so some issues may arise when working with it. However, due to NatML’s ease of use and its active development, we expect to be using it a lot in future machine learning related projects.